Introduction

Prior to this lab, each group created a terrain inside a sandbox of about one square meter (Figure 1) and was then tasked with devising a sampling scheme and technique for creating a digital elevation surface. Because of the small size of the study area, the grid scheme consisted of 6 × 6 cm squares, and systematic point sampling was used to gather elevation data at the X, Y intersections of each square. Where elevation changed sharply within a square, two measurements were taken in that square, giving the X or Y coordinate a value ending in .5. Data were recorded manually on a hand-drawn grid matching the one built over the sandbox. This method organized the data and assisted with data normalization as the data were entered into an Excel spreadsheet. Data normalization involves entering data into a database so that all related data are stored together without redundancy. The fields in this Excel file were set to numeric (double) to hold the decimal values and keep all data in the same format. X, Y, and Z data for each grid point were entered starting with the first X column, from bottom to top, and then moving on to the next column; this pattern eliminated redundancy and kept all data in one Excel file. In total, 434 data points were collected in a relatively uniform pattern throughout the entire study area, with some clustering of points in areas of rapid elevation change. These data points can be imported into ArcGIS or ArcScene, where interpolation can mathematically create a visual of the entire terrain based on the known values.
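The entry scheme described above (column by column, bottom to top, one row per X, Y location, with .5 coordinates for extra readings) can be sketched in Python. This is a minimal illustration under assumptions: the function names `systematic_grid`, `to_cm`, and `normalize` are hypothetical, coordinates are in grid units, and the 6 cm spacing is taken from the grid scheme; it is not the spreadsheet workflow actually used.

```python
def systematic_grid(n_x, n_y):
    """Grid-line intersections in grid units, listed column by column (X), bottom to top (Y)."""
    return [(x, y) for x in range(n_x) for y in range(n_y)]


def to_cm(coord, spacing_cm=6.0):
    """Convert a grid coordinate (possibly ending in .5 for an extra reading) to centimeters."""
    return coord * spacing_cm


def normalize(readings):
    """Return (x, y, z) readings in entry order, with each (x, y) location stored exactly once.

    A repeated location raises ValueError instead of silently creating a redundant row.
    """
    seen = set()
    for x, y, _z in readings:
        if (x, y) in seen:
            raise ValueError(f"duplicate reading at X={x}, Y={y}")
        seen.add((x, y))
    # Sorting on (X, Y) reproduces the column-by-column, bottom-to-top entry pattern.
    return sorted(readings, key=lambda r: (r[0], r[1]))
```

For example, `normalize([(1, 0, 5.0), (0, 1, 4.0), (0, 0, 3.0), (0.5, 2, 1.0)])` returns the readings reordered so that the half-square reading at X = 0.5 falls between the first and second columns.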

|

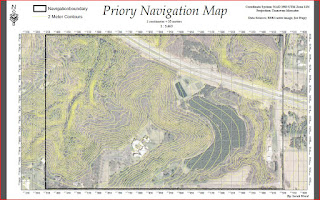

| Figure 1 |

Methods

To begin this process, a geodatabase was created, and the Excel file, which had been set to a numeric data format, was imported into the geodatabase, brought into ArcMap as X, Y data, and then converted into a point feature class. Once the point feature class had been made, the data could be used to create a continuous surface using interpolation methods. Four raster interpolations and one interpolation done in a vector environment were tested to determine the best representation of the terrain. The four raster methods are Inverse Distance Weighted (IDW), natural neighbor, kriging, and spline. The interpolation done in a vector environment is called TIN.

IDW and spline are deterministic methods: they compute values directly from a set of surrounding points or from a mathematical function to produce a continuous surface. IDW interpolation estimates each cell as a weighted average of the known points around it, with weights that decrease with distance, so a cell's value is influenced more by nearby known points than by distant ones. The influence of known points is controlled by the power parameter: a larger power places more emphasis on nearby points and results in a more detailed map, while a lower power gives distant points more weight and produces a smoother map. The output can also be varied by adjusting the search radius, which limits how far from the cell being calculated sample points are considered. IDW is not well suited to random sampling, because areas with a higher density of samples would be better represented than areas with fewer points. Spline uses a mathematical function whose resulting surface passes exactly through each sample point, making it well suited to large samples. The function creates a smooth transition from one point to the next and is ideal for interpolating gradually changing surfaces such as elevation, rainfall levels, and pollution concentration. Because this method passes through each sample point to create the resulting surface, it is not ideal for sampling techniques that produce clustered areas or few sample points relative to the entire area, such as random or stratified sampling.
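The IDW weighting, power, and search-radius behavior described above can be illustrated with a short Python sketch. It is a simplified, assumed formulation (weights of 1/d^power over all samples inside an optional radius), not the exact ArcGIS implementation, and the function name `idw` is hypothetical.

```python
import math


def idw(x, y, samples, power=2.0, radius=None):
    """Inverse Distance Weighted estimate at (x, y).

    samples: iterable of (xi, yi, zi) known points.
    power:   larger values give nearby points more influence (more detail).
    radius:  optional search radius; samples farther away are ignored.
    """
    num = den = 0.0
    for xi, yi, zi in samples:
        d = math.hypot(x - xi, y - yi)
        if d == 0.0:
            return zi  # the cell sits exactly on a sample point
        if radius is not None and d > radius:
            continue  # outside the search radius
        w = 1.0 / d ** power  # weight shrinks as distance grows
        num += w * zi
        den += w
    if den == 0.0:
        raise ValueError("no samples within the search radius")
    return num / den
```

With samples of 10 at (0, 0) and 20 at (10, 0), a query at (2, 0) returns 12.0 with `power=1` but about 10.59 with `power=2`, showing how a larger power pulls the estimate toward the nearest sample.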

Kriging is a multi-step, geostatistical method of interpolation that creates a prediction of a surface from sample points and also produces a measure of the accuracy of those predictions. It assumes that points are spatially correlated according to the distance and direction between them. Kriging is similar to IDW in that it weights points within a certain vicinity, but it goes beyond IDW by taking the spatial arrangement of the sample points into account through a process called variography. While kriging goes a step beyond IDW to create a more accurate portrayal of a surface, like IDW it is not well suited to sampling techniques that produce clusters of sample points, such as random sampling.
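To make the "prediction plus measure of accuracy" idea concrete, the following sketch implements ordinary kriging at a single location. It assumes an isotropic exponential variogram with a hypothetical sill and range (real variography would fit these to the samples and could also model direction), and it solves the kriging system with a small hand-written solver rather than any GIS library. All function names are hypothetical.

```python
import math


def _solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def exponential_variogram(h, sill=1.0, vrange=10.0):
    """Hypothetical exponential variogram model; variography would normally fit sill and range."""
    return sill * (1.0 - math.exp(-3.0 * h / vrange))


def ordinary_kriging(x, y, samples, variogram=exponential_variogram):
    """Ordinary kriging estimate and kriging variance at (x, y) from (xi, yi, zi) samples."""
    n = len(samples)
    a = [[0.0] * (n + 1) for _ in range(n + 1)]
    b = [0.0] * (n + 1)
    for i, (xi, yi, _) in enumerate(samples):
        for j, (xj, yj, _) in enumerate(samples):
            a[i][j] = variogram(math.hypot(xi - xj, yi - yj))
        a[i][n] = a[n][i] = 1.0  # Lagrange row/column: weights must sum to 1
        b[i] = variogram(math.hypot(xi - x, yi - y))
    b[n] = 1.0
    sol = _solve(a, b)
    weights, mu = sol[:n], sol[n]
    estimate = sum(w * z for w, (_, _, z) in zip(weights, samples))
    # Kriging variance: the "measure of accuracy" that accompanies each prediction.
    variance = sum(w * g for w, g in zip(weights, b[:n])) + mu
    return estimate, variance
```

Because the weights are constrained to sum to 1, the estimate at a sample location reproduces that sample's value with zero kriging variance, and the variance is generally larger for locations farther from the samples.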

Natural neighbor interpolation uses the subset of known points closest to the unknown point and does not infer patterns beyond that subset. Hence, if the sample does not already indicate a ridge, valley, or similar feature, the method will not create it. Natural neighbor interpolation is advantageous for samples collected with a stratified sampling method because of the subdivisions within the study area. However, random sampling may poorly represent a specific surface, and natural neighbor interpolation would then produce a poor representation of that surface as well.
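Exact natural neighbor interpolation requires a Voronoi diagram, which is long to write from scratch, so the sketch below swaps in a simpler discrete approximation of Sibson's natural neighbor method: each raster cell finds its nearest sample and passes that sample's value to every cell within the same distance, and each cell averages what it receives. This is an illustration on a hypothetical raster with a hypothetical function name, not how ArcGIS computes natural neighbor surfaces. Because every output is an average of sample values, it never exceeds the highest sample or falls below the lowest one, which mirrors the point above that the method will not invent a ridge or valley the samples do not show.

```python
import math


def discrete_sibson(samples, width, height, cell=1.0):
    """Discrete approximation of natural neighbor (Sibson) interpolation on a raster.

    samples: list of (x, y, z). The raster has `height` rows and `width` columns of
    square cells of size `cell`; each cell is represented by its center point.
    Returns a list of rows; row j holds the values for cells (0..width-1, j).
    """
    centers = [((i + 0.5) * cell, (j + 0.5) * cell)
               for j in range(height) for i in range(width)]
    total = [0.0] * len(centers)
    count = [0] * len(centers)
    for px, py in centers:
        # Nearest sample to this cell, and the distance to it.
        nx, ny, nz = min(samples, key=lambda s: math.hypot(px - s[0], py - s[1]))
        r = math.hypot(px - nx, py - ny)
        # Every cell within that distance (including this one) receives the sample's value.
        for k, (qx, qy) in enumerate(centers):
            if math.hypot(px - qx, py - qy) <= r:
                total[k] += nz
                count[k] += 1
    return [[total[j * width + i] / count[j * width + i] for i in range(width)]
            for j in range(height)]
```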

TIN, or triangulated irregular network, connects the sample points into triangles throughout the entire surface. The triangulation algorithm (Delaunay triangulation) tests the circle drawn through the three vertices of each candidate triangle and keeps only triangles whose circles contain no other sample point, producing a network of non-overlapping triangles that are as close to equilateral as possible. Because each triangle is a flat facet, slopes change abruptly at the triangles' edges, which may give the surface a jagged appearance, and TIN is not an advisable interpolation method for areas away from sample points.
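The circle test described above is the core of Delaunay triangulation. The sketch below uses the Bowyer-Watson algorithm, one standard way to build it, plus planar interpolation inside a single triangle, which is why slopes change abruptly across triangle edges. It assumes points in general position (for example, no three collinear); a regular grid like this survey's can break that assumption, and production TIN tools rely on robust geometric predicates instead. The function names are hypothetical.

```python
def circumcircle(a, b, c):
    """Center (x, y) and squared radius of the circle through points a, b and c."""
    ax, ay = a
    bx, by = b
    cx, cy = c
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    ux = ((ax * ax + ay * ay) * (by - cy) + (bx * bx + by * by) * (cy - ay)
          + (cx * cx + cy * cy) * (ay - by)) / d
    uy = ((ax * ax + ay * ay) * (cx - bx) + (bx * bx + by * by) * (ax - cx)
          + (cx * cx + cy * cy) * (bx - ax)) / d
    return ux, uy, (ax - ux) ** 2 + (ay - uy) ** 2


def delaunay(points):
    """Bowyer-Watson Delaunay triangulation; returns triangles as index triples into points."""
    pts = list(points)
    n = len(pts)
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    span = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    mx, my = (min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2
    # A "super triangle" far larger than the data; it is removed at the end.
    pts += [(mx - 20 * span, my - span), (mx, my + 20 * span), (mx + 20 * span, my - span)]
    tris = [(n, n + 1, n + 2)]
    for i in range(n):
        px, py = pts[i]
        # Triangles whose circumcircle contains the new point are no longer valid.
        bad = []
        for t in tris:
            cx, cy, r2 = circumcircle(pts[t[0]], pts[t[1]], pts[t[2]])
            if (px - cx) ** 2 + (py - cy) ** 2 < r2:
                bad.append(t)
        # Edges used by exactly one bad triangle form the boundary of the hole.
        edges = {}
        for t in bad:
            for e in ((t[0], t[1]), (t[1], t[2]), (t[2], t[0])):
                key = tuple(sorted(e))
                edges[key] = edges.get(key, 0) + 1
        tris = [t for t in tris if t not in bad]
        # Re-triangulate the hole by connecting its boundary edges to the new point.
        tris += [(a, b, i) for (a, b), k in edges.items() if k == 1]
    # Drop triangles that use a super-triangle vertex.
    return [t for t in tris if max(t) < n]


def interpolate_in_triangle(p, a, b, c):
    """Planar (linear) interpolation of z at p = (x, y) inside triangle a, b, c given as (x, y, z)."""
    x, y = p
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    wa = ((b[1] - c[1]) * (x - c[0]) + (c[0] - b[0]) * (y - c[1])) / det
    wb = ((c[1] - a[1]) * (x - c[0]) + (a[0] - c[0]) * (y - c[1])) / det
    wc = 1.0 - wa - wb
    return wa * a[2] + wb * b[2] + wc * c[2]
```

Each triangle returned by `delaunay` becomes one flat facet, and `interpolate_in_triangle` evaluates that facet; neighboring facets share edges but generally not slopes.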

Given the comprehensive and uniform collection of sample points across the terrain, each of these methods should produce a relatively accurate portrayal of it. However, the more densely sampled areas may be over-represented by every interpolation method except spline, because spline interpolation passes the surface through each data point and produces a smoothed surface between the points. Further reading in the ArcGIS help indicated that spline is also well suited to areas with large numbers of sample points and to gradually changing surfaces such as elevation. This suggested that spline would be the most appropriate method for the survey.
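The property this argument rests on, a surface that passes exactly through every sample and bends smoothly between them, can be demonstrated with a thin-plate spline. This is a simplified stand-in rather than the regularized or tension spline options in ArcGIS, and the function names are hypothetical. The sketch fits the spline by solving a small linear system for radial weights plus a planar trend term.

```python
import math


def _solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def _kernel(r):
    """Thin-plate spline radial basis r^2 log r (defined as 0 at r = 0)."""
    return 0.0 if r == 0.0 else r * r * math.log(r)


def fit_thin_plate_spline(samples):
    """Fit z = c0 + cx*x + cy*y + sum_i w_i * kernel(|p - p_i|) through every (x, y, z) sample.

    Requires distinct samples that are not all collinear.
    """
    n = len(samples)
    size = n + 3
    a = [[0.0] * size for _ in range(size)]
    b = [0.0] * size
    for i, (xi, yi, zi) in enumerate(samples):
        for j, (xj, yj, _) in enumerate(samples):
            a[i][j] = _kernel(math.hypot(xi - xj, yi - yj))
        # Planar trend columns, and side conditions sum(w) = sum(w*x) = sum(w*y) = 0.
        a[i][n], a[i][n + 1], a[i][n + 2] = 1.0, xi, yi
        a[n][i], a[n + 1][i], a[n + 2][i] = 1.0, xi, yi
        b[i] = zi  # the surface must pass exactly through this sample
    coef = _solve(a, b)
    w, (c0, cx, cy) = coef[:n], coef[n:]

    def surface(x, y):
        bend = sum(wi * _kernel(math.hypot(x - sx, y - sy)) for wi, (sx, sy, _) in zip(w, samples))
        return c0 + cx * x + cy * y + bend

    return surface
```

Evaluating the returned function at any sample's X, Y returns that sample's Z (up to rounding), and samples lying on a plane are reproduced by that plane exactly, so the spline adds bending only where the data require it.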

Results/Discussion

Prior to this activity, it was necessary to create a sandbox terrain and spatially sample it for elevation values. The entire terrain was slightly larger than one square meter, so systematic point sampling of the entire terrain was feasible. Systematic sampling within an X, Y plane of 361 quadrats, each 6 × 6 cm, resulted in an Excel spreadsheet with 434 data points (link to Excel file).

After the Excel file was imported into ArcGIS as X, Y data and converted into a point feature class (Figure 2A), the resulting sample points were uniform throughout most of the terrain, with areas of sharp elevation change sampled more densely. Each of the five interpolation methods described above was applied to the feature class to create a different continuous elevation surface, and each surface was analyzed for best fit for the survey.

For each method, the elevation-from-surfaces option was set to float on a custom surface, with the custom surface set to the corresponding interpolated surface, and each layer was set to shade areal features relative to the scene's light position. In addition, every scene except the TIN was given a stretched symbology with the same color ramp; the TIN method offers only elevation symbology and did not offer the same color ramp.

First, IDW resulted in elevation changes that were relatively smooth and accurate, but almost the entire image appeared “dimply,” similar to a zoomed-in view of a golf ball (Figure 3A). Kriging also represented elevation accurately, but it appeared very geometric; it would most likely resemble a fractal if each shape within the image were colored differently (Figure 3B). The natural neighbor method is smooth in most places, but edges are rough and smaller elevation changes are less pronounced (Figure 3C). TIN interpolation produced a very detailed image; however, it is very “blocky,” with angular curvature instead of a smooth surface appearance (Figure 3D). Finally, the spline interpolation method produced smooth elevation transitions, with the most pronounced representation of different elevations throughout the terrain. Based on these results, the spline method appeared to be the interpolation most appropriate for the survey (Figure 2B).

Before the interpolation methods were applied, and with only sampling techniques and some of the first law of geography researched, the survey seemed as though it may have been slightly excessive. On the other hand, a larger survey is ideal, and the survey did not take long to perform. After experimenting with and reading about each interpolation method, it can be concluded that the sampling technique and grid scheme were not excessive. The results from each interpolation method captured most details of the original terrain, and it was clear what was being portrayed in each image.

Figure 2

Figure 3

Summary/Conclusions

Field-based surveys collect the spatial data needed to estimate values in unsampled areas near the collected data, in order to establish an acceptable representation of the entire study area. This activity illustrated the basics of the survey process on a much smaller scale than usual. As in this survey, surveyors need to decide which sampling technique and tools are most suitable for the task. However, the tools used in most field-based studies go beyond string and thumbtacks; they include GPS receivers, total stations, 3-D scanners, and UAVs. In larger survey areas, surveyors must also consider temporal aspects. If a survey is a follow-up to a previous one, the surveyors need to consider whether it should be done under the same temporal conditions as the previous survey. Alternatively, if a new survey is to be conducted, the surveyors need to determine the best timing for it. The level of detail given to this small-scale survey is not always feasible, which results in a different collection of sample points. In such cases, the other interpolation methods could yield a more accurate representation of the surveyed area.

Interpolation is a useful tool for visualizing many things beyond elevation. Other gradually changing phenomena, such as water table levels and rainfall, can be interpolated, as can demographic data, such as a survey of HIV distribution in Africa. Ecological survey data, such as forest type, could also be interpolated. Interpolation methods are crucial in many fields for extracting important information that would otherwise be extremely difficult or impossible to survey completely.